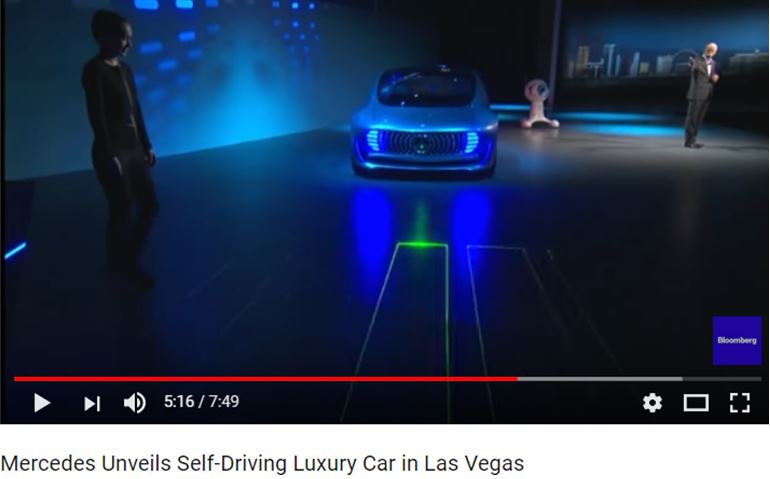

The research/development area of external vehicle communication has been growing and gaining a lot of attention and activity. This could be considered a predictable consequence of thinking-outside-the-vehicle-box in a world inundated with self-driving car tech news coverage. For example, up on the big stage (2015 CES Las Vegas), the CEO of Mercedes Daimler AG unveiled its F015 “Luxury in Motion” concept vehicle and showed how a vehicle might project a crosswalk in front of itself.

So to account for a being but a participant in a larger eco-system, there are many discussions out there forming regarding the interaction of intelligent vehicles (of whatever flavor you fancy: automated, autonomous, etc.) with the “others” now within more direct consideration.

Besides its own internal occupants a computer on wheels has to be instructed/programmed or learn perhaps somehow to interact with everyone else who might be around. Sometimes this group is called VRU for “vulnerable road user” to emphasis the collective disproportionate plight of motorcycles, mopeds, bicycles, pedestrians, etc. who are much more exposed and likely injured/killed/damaged if bumped the wrong way by weapons of mass acceleration.

At a workshop called External Communication Techniques (2017 Road Safety and Simulation International Conference, The Hague) held a few weeks ago, about a dozen different principal investigators and researchers shared their recent progress, and a common theme (from my take) that resulted from their collective research efforts was that natural motion dynamics and arrangements/aspects of the scene situation/context might be sufficient (e.g., information rich enough) kinds of communication. Furthermore, attempting additional (artificial/arbitrary) explicit communication messages did not seem to robustly add benefit/explanatory power to VRU objective (reaction times, behavior) nor subjective experiences (ratings like trust, understanding, etc.).

Additionally, it was discussed that people often claimed (subjectively) that they make heavy use of eye contact, head nods, waves, etc. to negotiate out there on the roads. However, a critical perspective was echoed to acknowledge that subjective and objective reality don’t always match up.

So it seems that while people may from time to time or place to place, certainly might express themselves with various gesticulations, it is worthwhile to admit that for some a flash of the headlights can mean one thing and one thing only as well as for others it might be something else entirely.

Interestingly, in an arguably larger majority of circumstances there in fact can be demonstrated that there is not often a clear direct line of sight of the driver of a vehicle but instead it is obscured/absent or otherwise heavily constrained through time/motions, (sunglasses, night time, glare, etc.). Yet somehow, pedestrians are still capable of negotiating crossing behavior, etc.

How many eyes, faces, heads can you see and interpret?

Looking at the picture above, how many eyes, faces, and heads can you see and interpret? Comparatively, how many cars can you at almost an instant determine are likely to be a conflict risk for you as if you were crossing this street? Parked cars are standing still, turning cars will (attempt) to complete turns, distances and times convey slowing down vs. speeding up cars, cars staying within and following or crossing (curved/straight) lane line markings, etc. Of course we don’t view/perceive in life via separate static sequential snapshots like a camera (although we are often tricked into believing we do). It should be remembered that communication/information then likewise also is generally not discrete but relies on (assumptions) from the before, after, and the around.

Of course, by now it probably should not be surprising to students of human factors, cognitive science, and engineering psychology that the power/relevancy of naturalism of affordances and ecological perspectives and situated cognition all would be pertinent to the external environment and such kinds of “interfacing”. For example, in the century before this, you can find the topics heavily expressed throughout the work of Princetonian J J Gibson. https://en.wikipedia.org/wiki/James_J._Gibson

Overall, my own personal linguistics rooted take on this topic is that the more directed/paired a message is (i.e., from a specific sender to a specific audience) then the more compatible arbitrary symbols can/might be (i.e., because ambiguity is reduced via some standard or common ground that can be assumed/assigned by recognizing/respecting the repertoire of each participant in that communication exchange). Just have a look at the many studies existing out there already trying to get ahead with trust in automation via a foray into inter-human trust formation/communication behaviors and concepts.

Contrastingly, the more open and undirected information/communication is (e.g., whether in a broadcast manner, or even just by coming up at unexpected times/roles from unexpected places) the less conducive is “arbitrary” symbols/signs and the more powerful and relevant is naturalism (i.e., because ambiguity is reduced via context and universality from constrained space/time physics, motion dynamic laws, etc.). Why indeed is it that we say “a picture is worth a thousand words”? Makes me wonder, how much is a movie worth? For example, here is a fine sample of cinematography from JJ Gibson himself: https://www.youtube.com/watch?v=qWhlYvSk5nw

The ambiguous communication open vs. directed conflict/complication is perhaps easily felt if you take the example of an autonomous vehicle telling a pedestrian that is safe for him/her to cross in front, that the autonomous vehicle represents somehow that it is yielding. Well, what if there is more to the puzzle, not one but two, several or even a crowd of people and/or other vehicles … who is the message for, how far does the message extend its relevancy in time and space, etc.?

Comparatively, other more naturalistic physical/motion properties are there that might be enough or helpful to emphasize/highlight/augment such as: is the vehicle slowing down, is it at a stop/stand-still, is it creeping forward, are there other vehicles or objects in or clear of its path, how fast (is being shown/assumed) does this vehicle come down to and/or get up away from stops, which way is it (its front wheels) pointed compared to me, etc.

Consider that numbers, letters, words are “arbitrary” signs/symbols/codes requiring interpretation, and that interpretation requires (knowledge/assumptions of) a specified sender and (knowledge/assumptions of) a specified recipient.

When we walk down the street we don’t just go shouting out streams of arbitrary words, broadcasting our state/intent/perceptions for everyone at once within ear shot to hear (in fact we call this “crazy” or abnormal or off-nominal behavior). However when we walk down the street we do indeed “silently” and “implicitly” and “intuitively” communicate our states/intents/perceptions via our naturalistic motion through the space we take up, our various dynamical postures and accelerations, etc. and through such are already able to successfully negotiate and move about with one another under most circumstances (to me this is all just as much to be fairly considered as information and so the same in that sense as other more explicit verbal forms of communication).

What I find fascinating is that humans know other humans, we have evolved together over millions of years in rather predictable sizes/shapes with rather predictable perception and motion dynamics/capabilities. As variant/variable as we consider ourselves to be, in a bigger gross picture scheme of things … we are relatively quite normative.

Thus, if you consider the classic cut up of “situation awareness”, humans are pretty well equipped/conditioned “for free” to successfully guess at the present perceptions, immediate comprehensions/pre-occupations, and near-future actions of surrounding humans (especially the more proximal the other person might be in size/shape/sensibility and assumed collective human experiences) without a lot of “out loud” explicit signs/symbols kinds of communication.

What is each person seeing, thinking about, and about to do in the next few moments?

I feel an interesting set of (sort of open, yet arguably closed already) question/challenge presents itself when we have different fleets of autonomous vehicles of many shapes/sizes, with different hardware and different software, different brands, different municipal, federal, international licenses and rules, different acceleration/dynamic capabilities, etc.

What becomes most sensible immediately for those lucky humans who (in the near future and transitional time periods) might need to interact with them and in both directed/paired vs. open/broadcast kinds of communication?

Will symbol/signed based (arbitrary) communications prevail? In my opinion this, would probably require a level of common ground/assumption that I fear will be mostly absent until if it ever can be thoroughly and heavily standardized. On the other hand, would more naturalistic physical motion and situation/infrastructure aspects suffice and be beneficial in a majority of contexts?

***

If you are a researcher active/interested in this area, I just heard about a special session being arranged on the interaction between traffic participants during the upcoming 20th Congress of the International Ergonomics Association (2018 Florence, Italy). “Motion as a new Language for Cooperative Automated Vehicles”

“Future vehicles will independently perform complex maneuvers in cooperation with the drivers, neighbouring vehicles and vulnerable road users.

The successful cooperation between road users is the basic condition for the safety and efficiency of a traffic system. Communication between road users is an enabling factor towards cooperative principle.

This session focuses on the potential use of vehicles‘ motion behavior and trajectories as an intuitive and potentially prescriptive language in traffic besides signaling systems and explicitly displayed messages.”

Recent Comments